Via Megan McArdle: the New York Times and the Washington Post are reporting on recent problems stemming from the Obama Administration’s healthcare-data project. Apparently data analysts studying the health impacts of new programs are not controlling their experimental samples. Whereas ideally the government’s analysis would be the basis for crafting intelligent policy, the New York Times’s description calls into question the robustness of the research being conducted.

“The studies that are regarded as the most reliable randomly assign people or institutions to participate in a program or to go on as usual, and then compare outcomes for the two groups to see if the intervention had an effect.

Instead, the Innovation Center has so far mostly undertaken demonstration projects; about 40 of them are now underway. Those projects test an idea, like a new payment system that might encourage better medical care — with all of a study’s participants, and then rely on mathematical modeling to judge the results.”

The superficial approach described above is odd because it seemingly flies in the face of conventional approaches to statistical modeling. For those not familiar, establishing a randomized control group is essential to getting results that don’t just confirm the hypothesis being tested. You can see this problem in the infamous Israeli Air Force study (a really informative overview of this concept can be found on YouTube), and it has been a long-standing statistical understanding that, when possible, randomized control samples are always preferable.
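To make the point concrete, here is a minimal simulation sketch of regression to the mean, the effect behind the Israeli Air Force story. Everything in it is an assumption made for illustration: the function name `simulate`, the group sizes, and the idea that a score is just fixed skill plus random noise. The "intervention" does nothing at all, yet the uncontrolled before-and-after comparison still shows a large apparent improvement, while the comparison against a randomized control group shows roughly none.

```python
import random
import statistics


def simulate(n=10000, seed=0):
    """Return (naive_effect, controlled_effect) for an intervention with zero true effect."""
    rng = random.Random(seed)

    # Hypothetical model: each observed score is fixed skill plus independent noise.
    skill = [rng.gauss(0, 1) for _ in range(n)]
    first = [s + rng.gauss(0, 1) for s in skill]

    # Single out the worst 20% of first-round performers for the "intervention".
    cutoff = sorted(first)[n // 5]
    selected = [i for i in range(n) if first[i] < cutoff]

    # Randomly split the selected group into treated and control halves.
    rng.shuffle(selected)
    half = len(selected) // 2
    treated, control = selected[:half], selected[half:]

    # Second round: the treatment changes nothing, so scores are skill plus fresh noise.
    second = [s + rng.gauss(0, 1) for s in skill]

    def mean_change(group):
        return statistics.mean(second[i] - first[i] for i in group)

    # Uncontrolled view: the treated group simply "got better".
    naive_effect = mean_change(treated)
    # Controlled view: improvement beyond what the untreated control group showed.
    controlled_effect = mean_change(treated) - mean_change(control)
    return naive_effect, controlled_effect


if __name__ == "__main__":
    naive, controlled = simulate()
    print(f"apparent effect without a control group: {naive:.2f}")
    print(f"effect relative to randomized control:   {controlled:.2f}")
```

The treated group improves only because it was selected for an unusually bad first round. Without the control half, that improvement is indistinguishable from a real effect.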

So why do government analysts feel so confident that they can dispense with what has, until recently, been an essential feature of any statistical experiment? Well, because they’ve got great data-mining technology! Here, the phrase “mathematical modeling” does a lot of work in obscuring the real methods that the government is using. Mathematical modeling can mean almost anything, and ironically the NYT’s link on this description is broken.

Megan McArdle has a good take on the possible source of the mistake: sloppy thinking on the part of federal bureaucrats. Says McArdle:

Gold’s article implies that the administration is looking at gross savings — which is to say, it’s just reporting the amount of money saved by the accountable-care organizations that ended up on the positive side of the ledger, even though this is less than half the total. Statisticians have a term for this: the Texas sharpshooter fallacy…..
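The gross-versus-net distinction in that passage can be illustrated with a small sketch. All of the numbers are made up for illustration: the function name `gross_vs_net`, the 200 organizations, and the assumption that each organization's result is pure noise around a true savings of zero. None of it is drawn from the actual accountable-care data.

```python
import random


def gross_vs_net(n_orgs=200, seed=1):
    """Return (gross, net) savings when every organization's true savings is zero."""
    rng = random.Random(seed)

    # Hypothetical results in $ millions: noise only, no real savings anywhere.
    results = [rng.gauss(0, 5) for _ in range(n_orgs)]

    # Gross savings: count only the organizations that landed in the black.
    gross = sum(r for r in results if r > 0)
    # Net savings: count every organization, winners and losers alike.
    net = sum(results)
    return gross, net


if __name__ == "__main__":
    gross, net = gross_vs_net()
    print(f"gross savings (winners only):   {gross:.1f}")
    print(f"net savings (all organizations): {net:.1f}")
```

Reporting only the winners yields a large positive figure even though, by construction, nothing was saved. That is the sharpshooter drawing the target after firing.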

Perhaps I am even more cynical than McArdle, but my take is somewhat different.

Given that the administration has been unable to produce evidence of healthcare savings from increased coverage, it is fair to say that the president is feeling pressure to come up with some statistical result that will make costs appear more reasonable (at least ahead of the next CBO estimate). Moreover, without speculating too much about the overall structure of bureaucratic management, I think it likely that individual analysts are also feeling the pressure to deliver “good” results, especially with all of these cool new “big data” tools so prominently featured in the news.

The result is predictable: a sort of magical thinking arises in which data mining and complex models become a panacea for turning poorly conducted statistical tests into predictive models showing large savings from new “innovative” approaches to delivering healthcare. Of course the results are all confirmation bias, but who’s going to look a gift horse in the mouth? Certainly not an administration desperate for good news on the healthcare front.

Now admittedly, I have no inside information, but if this kind of sloppy analysis is indeed going on, then it is certainly a cause for concern. The one-sided use of over-optimistic healthcare predictions could lead the CBO to perennially underestimate the cost of supporting programs like Medicare in their current state. This in turn could ultimately doom these programs’ long-term solvency (not to mention the long-term solvency of the country), since politicians are all too willing to forgo necessary reform in light of CBO reports that tell them healthcare costs will come down of their own accord.

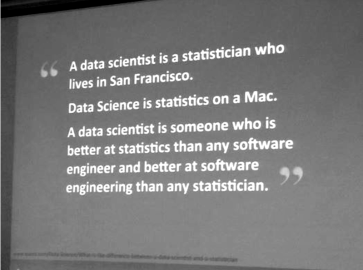

But ultimately this problem is not political. It stems from a cultural approach to data analysis that is far too prevalent in industry and in government. I like to think of it as a modern-day Rumpelstiltskin story. What do we have? Reams of uncontrolled data. What do we want? Optimistic predictive results. With this point of view, it’s tempting to simply lock analysts in a room and ask them to build mathematical models until they finally manage to spin the straw data into golden predictive models, like the miller’s daughter from the aforementioned fairy tale.

But just as in the fairy tale, when we force someone to spin straw into gold, it shouldn’t be surprising when magical methods play a large role in their process. Moreover, in the case of the government’s own analysis, the Rumpelstiltskin metaphor can be taken yet further. For in trusting their magic numbers, our current leaders may have put the next generation on the line for the results.